By David Bruno

The world’s largest social media platform could face a future of tighter regulations and more stringent rules.

Facebook (now known as Meta) and its various social media platforms (including Instagram, WhatsApp and the core Facebook product) host more than half of the world’s population. This scale gives the tech giant unprecedented corporate, social and, most importantly, political power in the social networking space. Given the power and influence the company wields, it has been accused of failing to prevent the spread of misinformation and of inflaming tensions worldwide. However, it is the role its apps have played in shaping politics around the globe that is sending shockwaves through regulatory circles.

It’s becoming increasingly clear that the app often takes sides during political campaigns, which has not gone down well with world powers or the public. Its decisions on whom to silence during heated political confrontations have often landed it in trouble.

Sensitive debates on Facebook routinely produce a sharp divide. Activists on the left urge the company to take more drastic measures against those who allegedly propagate harmful content. Activists on the right, on the other hand, have long accused the company of censoring conservatives.

By taking sides, Facebook has repeatedly strayed from the public commitment to neutrality long championed by Chief Executive Officer Mark Zuckerberg. Former Facebook director of global public policy Matt Perault believes the network’s commitment to neutrality is no longer a reality but a façade that is slowly slipping away.

Outrage and Bitter Debates

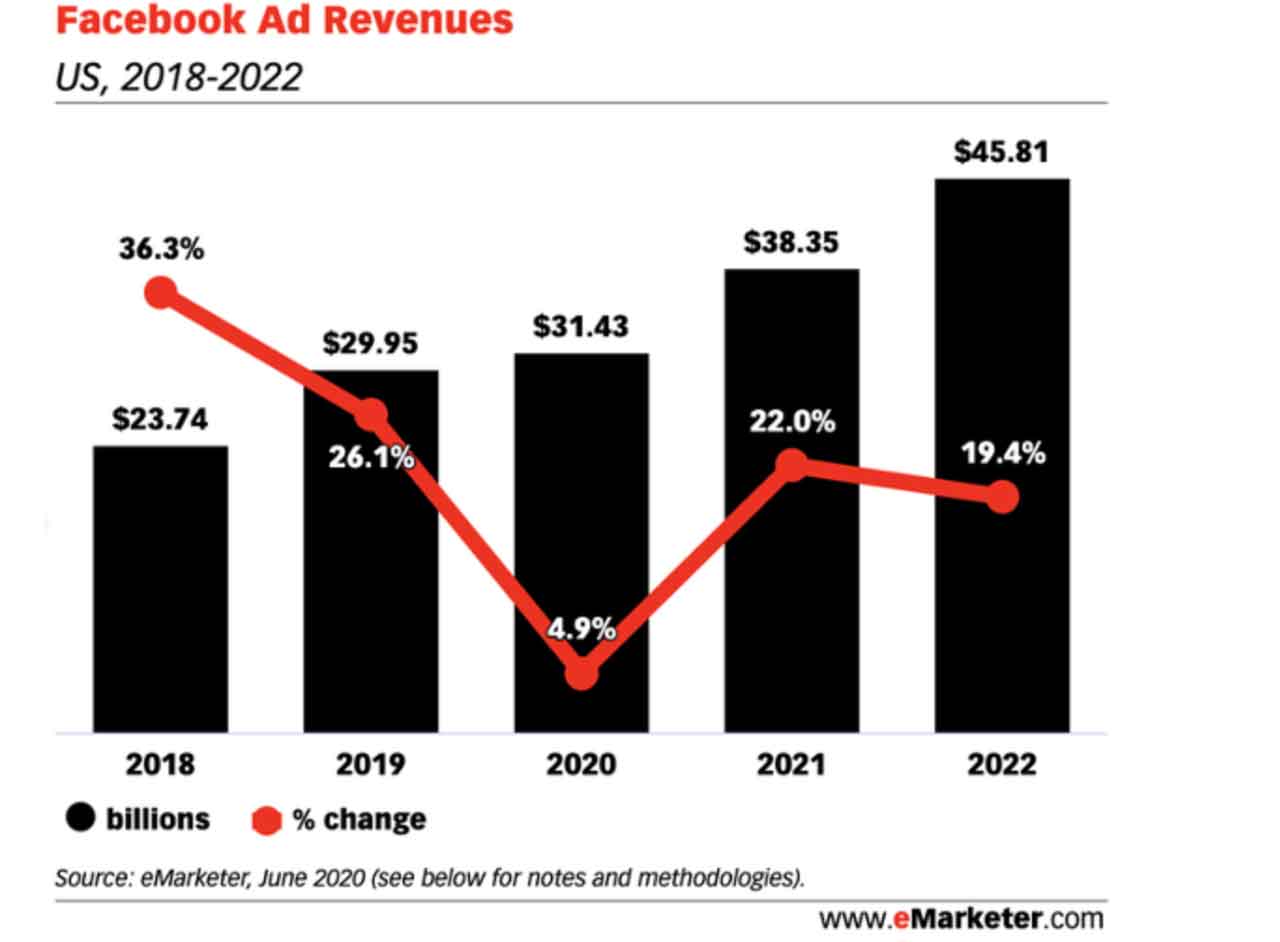

By taking sides, Facebook has often triggered deep divisions, something that may well be entrenched in its core business. It is important to note that the company makes 98% of its revenue from the highly lucrative advertising business. For advertisers to be convinced to advertise on the network, engagement levels must be extremely high compared to other platforms.

Source: Statista.com

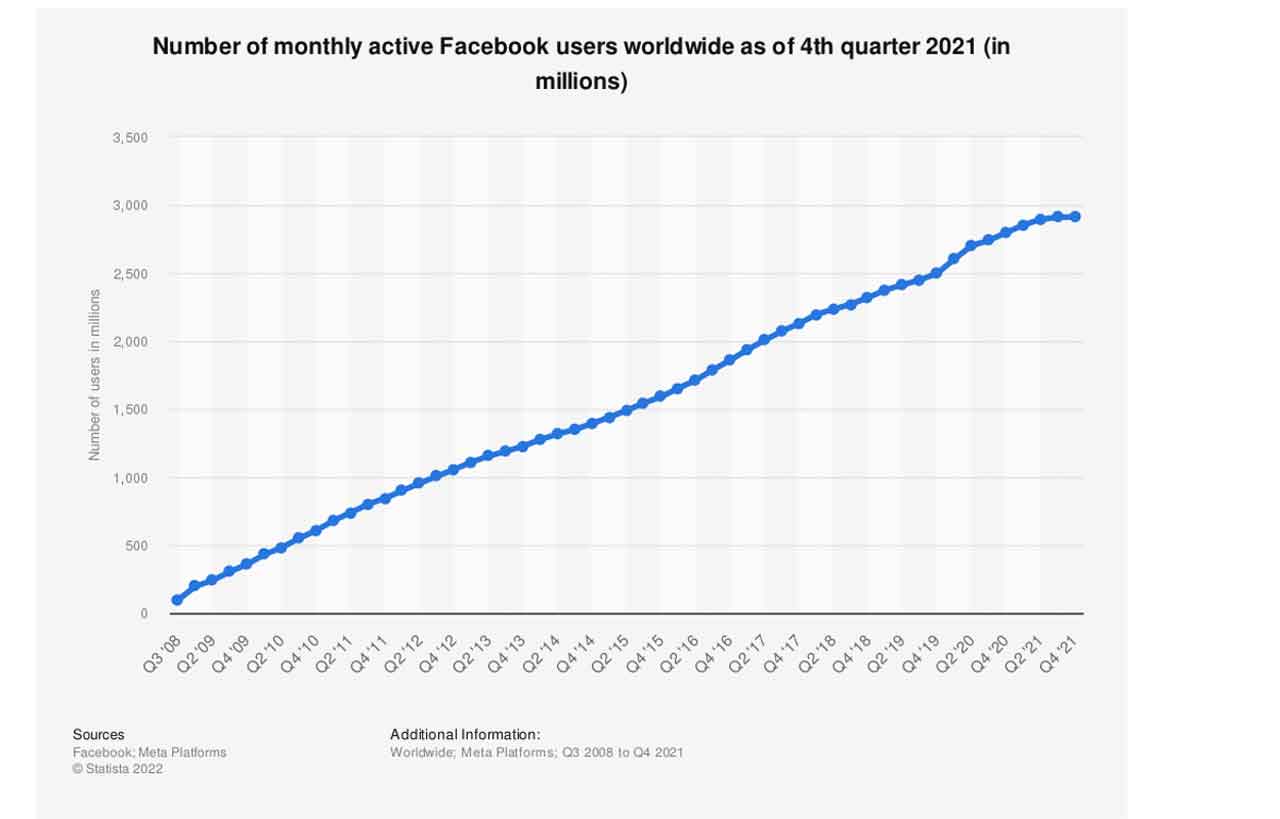

While Facebook boasts more than 2 billion active users every month, finding a way to bolster engagement levels has always been a major challenge, and one that has also landed it in trouble. A close look at the company’s business model makes it clear that dividing users over contentious topics helps attract more users to the platform and drives engagement levels higher. [9]

Facebook has succeeded in creating filter bubbles in which social media algorithms are relied upon to increase engagement levels by any means. Triggering inflammatory content or discussions has in the recent past turned out to be the most effective way of getting people to spend more time on the apps, glued to heated discussions.

Source: Businessinsider.com

As people engage in unending bitter discussions, Facebook app engagement levels have increased, allowing the company to run advertisements and earn top dollar from big brands. It’s no longer a secret that the social networking app benefits greatly from the proliferation of extremism, bullying, and hate speech known to trigger unending discussions on the app.

Facebook would struggle to bolster engagement levels if it stuck to a business model focused on providing accurate information and diverse views. Therefore, it would rather stick to inflammatory content capable of keeping people glued to the app for long to attract big advertising campaign dollars.

Facebook Business Model Political Impact

While Facebook’s divisive business model has gone a long way in widening the gap between the right and the left, it is the political impact that is sending shockwaves globally. In 2012, before political influence became a hot subject, the Obama campaign used the app to register voters and encourage people to get out and vote.

Fast forward, and the app has been optimized to do more than encourage people to get out and vote. In an attempt to bolster engagement levels and attract more active users, the app has been used to sway how people vote. The 2016 US presidential election painted a clear picture of how the app was used by agents sponsored by the Russian government to influence the outcome.

Increased focus on enhancing engagement levels at all costs has also plunged Facebook into big trouble. The social networking company has already been sued for allegedly fueling the genocide of Rohingya Muslims in Myanmar. It is alleged that the company’s algorithms ended up amplifying hate speech on the platform rather than taking down inflammatory posts.

The company currently faces claims worth more than £150 billion in a case filed in court in San Francisco. In the lawsuit, Facebook is accused of focusing on improving market penetration in the country rather than clamping down on the hate speech that sparked genocide[2]. Facebook’s actions in Myanmar all but attest to how social media is “socially taking us backward”, with complicit silence putting profit over what is right.

In India, WhatsApp has also emerged as a powerful tool for swaying how voters cast their ballots [5]. The app, with more than 250 million users, has been used in recent elections to propagate misinformation, such as dark warnings about Hindus being murdered by Muslims, all in an effort to sway voting patterns.

WhatsApp has also been used by Indian political parties, religious activists and others to send messages to more than 49 million voters. A point of concern is that some of the messages have inflamed sectarian tensions, while others have spread outright falsehoods with no trace of where they originated. WhatsApp has escaped scrutiny because it is mostly used outside the US.

Social Media’s Role in the Russia/Ukraine War

Alarming images out of the ongoing Russian invasion of Ukraine already paint a clear picture of the influence social media networks can have on people’s perceptions [14]. Misleading and manipulated information portraying one side as having the edge has become a common occurrence on Facebook, Twitter, and other networks.

Visuals have become the preferred way of propagating a given theory, given their persuasive potential and attention-grabbing nature. Disinformation campaigns are being fueled by some of the social networks given their ability to sow divisions and influence engagement levels between parties, thus bolstering the core business[13].

Facebook’s role in the region highlights just how impactful this platform is and how deeply entrenched it can be. While Facebook properties, such as WhatsApp, have served as an essential tool for people to contact loved ones trapped in the midst of the conflict[6][12], they’ve also been used to spread misinformation, propaganda, and death threats on both sides. In fact, Facebook took the unusual step of allowing death threats on the platform as long as they were directed at Russians[7]. Setting aside the moral implications of this, it highlights the power this corporation (which is ultimately controlled by one individual) has over the world’s most consequential military conflict.

Unsurprisingly, the move convinced Russian authorities to ban Facebook across the nation. However, the ban was limited to Facebook’s core platform and Instagram and did not include WhatsApp. Estimates suggest that roughly 84 million Russians, a significant portion of the population, use WhatsApp for essential communications[11]. That could be the reason it was left out of the ban, further highlighting how difficult it is for countries to regulate the platform[13].

Social Media Regulations and Government’s Role

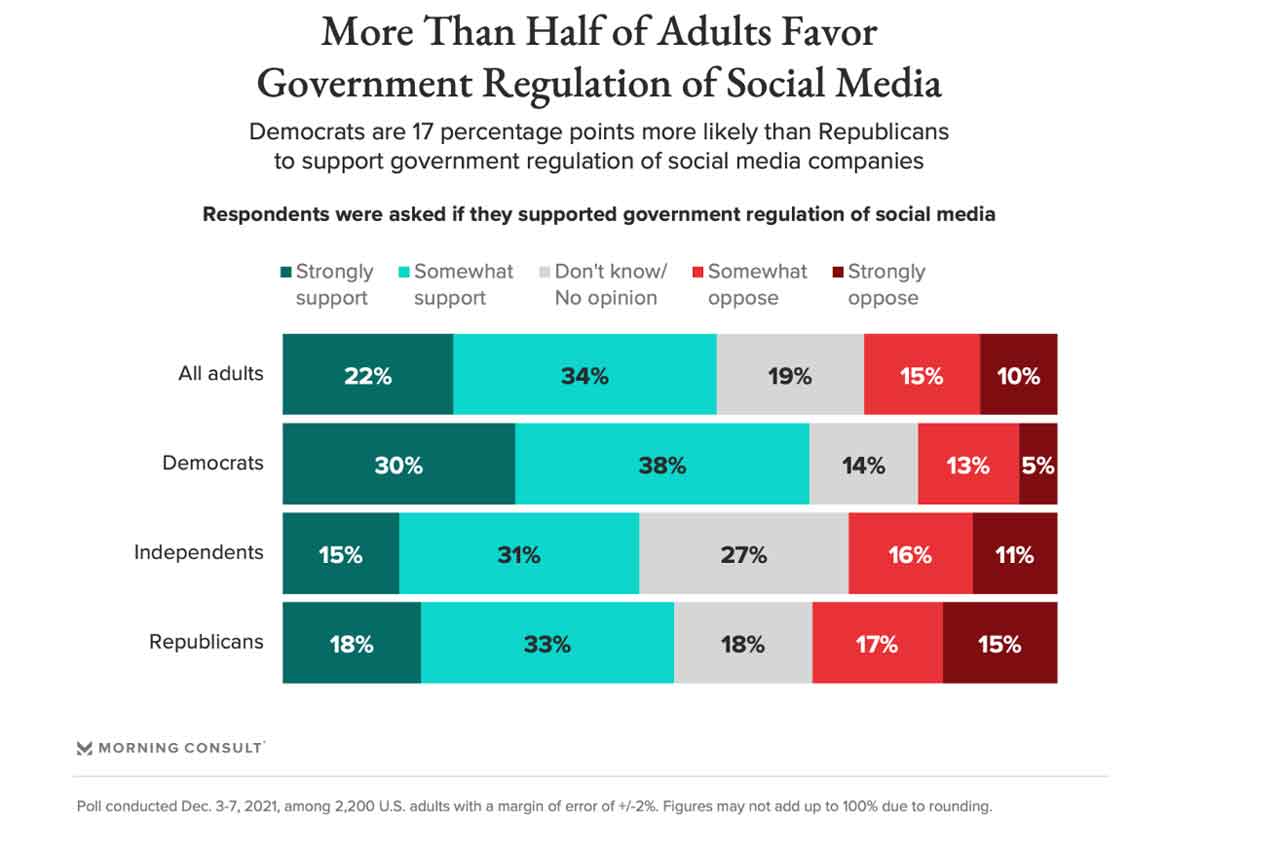

Faced with mounting pressure from the populace and lawmakers, social networks have started to act in the fight against misinformation and misleading content. Twitter and Facebook banning former US President Donald Trump from their platforms for allegedly sharing misleading information and inflaming millions of his followers is a clear indication they can act when pressed.

Source: Morningconsult.com [10]

Bans and censorship are turning out to be the preferred measures for clamping down on misinformation and content aimed only at inflaming the masses. However, there is an acknowledgment that simply banning people won’t do much to address the deep-rooted issues that lead to events such as the one at the US Capitol on January 6, 2021.

One of the most touted solutions would be social media networks focusing their efforts on protecting against algorithmic political polarization in the first place. Taking down one actor or political cause does not solve anything but worsens the situation.

The US government has already set its sights on Section 230 of the Communications Decency Act[1], which protects internet companies from liability for user-generated content disseminated on their platforms. Legislation to protect the general public from harmful content has been mooted.

Some of the legislation under consideration could require Facebook and Twitter to meet certain standards in transparency and data protection. Holding social media companies liable for misinformation or other damaging content shared on their platforms could have some impact.

Tighter regulations would be a game-changer in clamping down on misinformation and misleading content on social networks. While they are expected to protect the public, they would be bad for Facebook. The giant social network would have to develop new ways to keep people engaged longer on the app to attract the top advertising dollars.

Meanwhile, Canada has been slow to act. Natasha Tusikov, an assistant professor at York University and author of Chokepoints: Global Private Regulation on the Internet, says the Canadian government has lagged behind other developed countries in tightening regulations on social media platforms[4]. While Austria, Germany and the United Kingdom have recently introduced new measures to tackle hate speech and disinformation online, Canada is still considering, but not actually implementing, new rules.

The current government under Prime Minister Justin Trudeau has brought forward two new bills in this arena[14]. Bill C-10 was structured to modernize the Broadcasting Act, while Bill C-36 was designed to provide recourse for users who faced online bias, hate, and prejudice[8]. These bills were created after years of committee investigations and studies. However, they stalled when Parliament was dissolved in 2021.

While the government promises to revive them, Tusikov has said that even these measures don’t address the root of the problem: the business model of social media. Social platforms thrive on user engagement, which drives retention, viewership and conversions for the platform’s true customers: advertisers. While mainstream advertisers have pulled back from toxic online content, the engagement rate is, in general, the key factor considered for most campaigns. Put simply, social media’s revenue relies on retaining attention, which indirectly encourages extremist views and sensational content on the platforms[3]. Fact-checking and moderation are disincentivized by this model.

Unless governments can seriously regulate the social platforms and put guardrails on their business model, the creation and dissemination of toxic content isn’t likely to decline.

Digital Charter

On June 16, 2022, the federal government introduced Bill C-27, Digital Charter Implementation Act, 2022. If passed, the potential Digital Charter could greatly enhance the security of internet users across the country while streamlining the flow of information across our digital platforms.

Besides regulating the way personal information is handled by corporations, and how enterprises can use artificial intelligence, the Charter includes the following proposals:

- Greater transparency about how personal information is stored and managed by corporations.

- Freedom to move personal data from one corporation to another with ease.

- The right to withdraw consent or request the destruction of personal data when it is no longer necessary for use by the corporation.

- Fines of up to 5% of revenue or $25 million, whichever is greater, for the most serious offenses – the most stringent fines in the G7.

- Standards for the development and management of AI-enabled systems.

If implemented, these standards and protocols could vastly improve the digital landscape in Canada. No longer would tech giants from across the border be in charge of Canadian lives and their access to vital information. The negative impacts of social platforms controlled by Meta Platforms, TikTok, Google, Reddit, and Twitter could be somewhat mitigated. Emerging threats from the misuse of AI and data-driven business models could also be reduced.

To enforce these standards, Bill C-27 greatly enhances the powers of the Office of the Privacy Commissioner (OPC). If passed in its current form, the bill could allow the OPC to demand changes from an organization. It could also allow the OPC to publicize these changes to offer members of the public greater transparency. Under the Bill’s recommendations, the OPC can seek and approve Codes of Conduct related to these data compliance requirements.

To process penalties, Bill C-27 recommends the creation of a new Data Protection Tribunal (Tribunal). This tribunal could have the power to act on recommendations from the OPC and implement penalties of a maximum of $10,000,000 or 3% of gross global revenue for non-compliant organizations.
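To make the two penalty ceilings described above concrete, here is a minimal Python sketch. It assumes both ceilings follow a "whichever is greater" rule (the text above states this explicitly only for the 5% or $25 million tier), and the function name and the sample revenue figure are hypothetical, chosen purely for illustration.

```python
def penalty_cap(gross_global_revenue: int, flat_cap: int, revenue_percent: int) -> int:
    """Return the larger of a flat dollar cap and a percentage of revenue.

    Assumption: the ceiling is 'whichever is greater' of the two amounts.
    Integer arithmetic is used to avoid floating-point rounding.
    """
    return max(flat_cap, gross_global_revenue * revenue_percent // 100)


# Hypothetical company with $2 billion in gross global revenue (illustrative only).
revenue = 2_000_000_000

# Tribunal tier: $10,000,000 or 3% of gross global revenue.
tribunal_cap = penalty_cap(revenue, 10_000_000, 3)

# Most serious offences tier: $25 million or 5% of revenue.
offence_cap = penalty_cap(revenue, 25_000_000, 5)

print(f"Tribunal penalty ceiling: ${tribunal_cap:,}")
print(f"Serious-offence fine ceiling: ${offence_cap:,}")
```

For a smaller organization the flat amount dominates under this assumption: with $100 million in revenue, 3% is only $3 million, so the ceiling would stay at $10 million.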

In summary, the federal government’s proposed bill would enhance data protections by making consumer consent the primary driver of data collection. However, to reduce the burden on individual consumers, the bill makes organizations responsible for transparency and compliance while instituting a new tribunal to enforce penalties.

It remains to be seen if this bill passes into law. But if implemented, it could have a substantial impact on the way Canadians use digital platforms and the business model of providing these platforms to Canadians.

Conclusion

As the largest social network conglomerate in the world, Meta Platforms (formerly known as Facebook), has an outsized influence on online discourse. While the company’s apps have helped connect people across the world, they’ve also served as potent tools for misinformation, hate speech, and extremism.

Unfortunately, these elements are a core part of the social media business model. The more extreme and sensational the content, the better the retention and engagement from users, which ultimately leads to more conversions for advertisers. This vicious cycle has helped the companies accrue wealth, but has spread the disadvantages across the globe.

In most countries, the disadvantages could outweigh the advantages. Countries deal with the consequences of political interference, misinformation, hate speech, and extremist ideology but don’t benefit from the tax revenue on sales or revenue generated by these American conglomerates. This means most countries have a clear path to more regulation and tighter rules on these platforms. Streamlining online content is in their best interest.

Regulators in Austria, Germany and the United Kingdom have already taken bold measures to tackle the issue, while the Biden Administration is considering a similar slew of new regulations. However, regulators in Canada have lagged behind. New bills have been introduced and discussed, but until these bills address the core issue or get passed into actual law, Canadian internet users are at a disadvantage to their global peers.

Nevertheless, the tide seems to be turning and Facebook (along with other social media giants) could face a future with tighter rules and more stringent controls on their operations.

About David Bruno:

As founder and former CEO of a global cyber security firm, David specialises in anti-fraud and anti-corporate espionage systems worldwide. He provides financial sector solutions for the digital and interactive FINTECH sectors, and for over 20 years he has worked to provide security protections to the masses and has invested his own money in a free E2EE encrypted email server for the public. He is a contributor and member of Electronic Frontier Foundation (EFF) advocating for defending digital civil liberties. He is a Tech Policy Analyst for Washington DC based Global Foundation For Cyber Studies & Research (gfcyber.org) and a contributor to the Northern Policy Institute dedicated to educating the public on the surveillance of email in general and the importance of encryption, especially for vulnerable populations like refugees. He was also a policy contributor to Canada’s new Digital Charter, re-introduced in 2022 by Minister François-Philippe Champagne.

Copyright © 2022

Sources

- Are we entering a new era of social media regulation? Harvard Business Review. (2021, December 13). Retrieved April 1, 2022, from https://hbr.org/2021/01/are-we-entering-a-new-era-of-social-media-regulation

- Business Insider. (n.d.). Facebook has been sued for over $150 billion for allegedly fostering a 10-year genocide. Business Insider. Retrieved April 1, 2022, from https://africa.businessinsider.com/news/facebook-has-been-sued-for-over-dollar150-billion-for-allegedly-fostering-a-10-year/qt7x7kr

- (2020, June 23). Facebook ad revenue in 2020 will grow 4.9% despite the growing number of brands pulling campaigns. Business Insider. Retrieved April 1, 2022, from https://www.businessinsider.com/facebook-ad-revenue-growth-2020?r=US&IR=T

- Gilmore, R. (2022, January 13). The Dark Side of social media: What Canada is – and isn’t – doing about it – national. Global News. Retrieved April 1, 2022, from https://globalnews.ca/news/8503534/social-media-influenced-government-regulation/

- Goel, V. (2018, May 14). In India, Facebook’s whatsapp plays central role in elections. The New York Times. Retrieved April 1, 2022, from https://www.nytimes.com/2018/05/14/technology/whatsapp-india-elections.html

- Guardian News and Media. (2021, December 6). Rohingya sue Facebook for £150bn over Myanmar genocide. The Guardian. Retrieved April 1, 2022, from https://www.theguardian.com/technology/2021/dec/06/rohingya-sue-facebook-myanmar-genocide-us-uk-legal-action-social-media-violence

- Hatmaker, T. (2022, February 27). Russia says it is restricting access to facebook in the country. TechCrunch. Retrieved April 1, 2022, from https://techcrunch.com/2022/02/25/russia-facebook-restricted-censorship-ukraine/

- Heritage, C. (2022, March 30). Government of Canada. The Government’s commitment to address harmful content online – Canada.ca. Retrieved April 1, 2022, from https://www.canada.ca/en/canadian-heritage/campaigns/harmful-online-content.html

- Lauer, D. (2021, June 5). Facebook’s ethical failures are not accidental; they are part of the business model – AI and Ethics. SpringerLink. Retrieved April 1, 2022, from https://link.springer.com/article/10.1007/s43681-021-00068-x

- Lawmakers see 2022 as the year to rein in social media. others worry politics will get in the way. Morning Consult. (2022, February 4). Retrieved April 1, 2022, from https://morningconsult.com/2021/12/15/social-media-regulation-poll-2022/

- Meaker, M. (2022, March 21). Why WhatsApp survived Russia’s Social Media purge. WIRED UK. Retrieved April 1, 2022, from https://www.wired.co.uk/article/whatsapp-russia-meta-ban

- Service, T. N. (n.d.). Parents of stranded students in Ukraine form Support Group on WhatsApp. Tribuneindia News Service. Retrieved April 1, 2022, from https://www.tribuneindia.com/news/punjab/parents-form-support-group-on-whatsapp-373370

- Thomson, T. J., Angus, D., & Dootson, P. (2022, February 28). How to spot fake or misleading footage on social media claiming to be from the Ukraine War. PBS. Retrieved April 1, 2022, from https://www.pbs.org/newshour/world/how-to-spot-fake-or-misleading-footage-on-social-media-claiming-to-be-from-the-ukraine-war

- Trudeau blasts social media disinformation – in Russia and Canada. POLITICO. (n.d.). Retrieved April 1, 2022, from https://www.politico.com/news/2022/03/11/trudeau-takes-disinformation-fight-to-europe-00016709